Background

Artificial intelligence (AI) is an umbrella term covering a wide array of technologies and applications, from predictive analytics in finance to autonomous robots in manufacturing, and from medical‐image analysis to language translation services. While definitions vary by discipline and use case, most agree on three core features: the ability to sense or interpret an environment; to learn from data; and to act with some degree of autonomy toward specific objectives. For example, the OECD (2024) defines an AI system as:

“a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.”

Given AI’s many forms and applications, no single, universal definition exists, so this description captures the essential elements where most definitions converge (Jones 2023; Zinnbauer 2025).

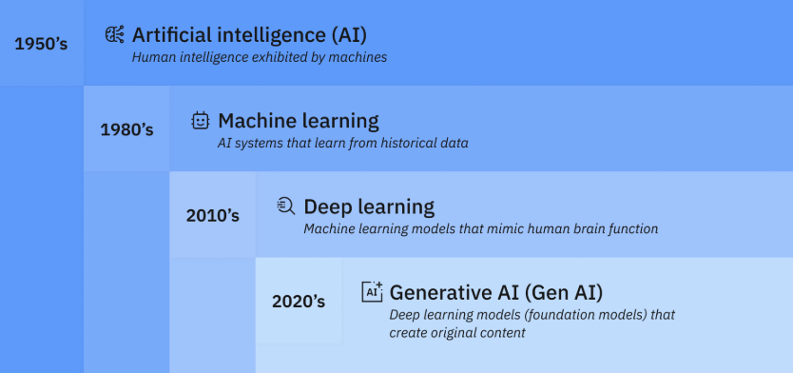

The field of AI dates back to the mid-twentieth century, with early work on symbolic reasoning in the 1950s, but its pace of innovation has markedly accelerated in the 2020s with the development of generative AI, thanks to advances in deep learning architectures (e.g. transformers), the explosion of digital data and growing computing power (Roy 2023; Zinnbauer 2025) (see Figure 1). This surge is particularly evident in the development of large foundation models and the technologies that build upon them, such as generative AI.d5869bb0771b These tools leverage unsupervised or semi-supervised trainingb2bb3af065b9 on vast unlabelled datasets to learn broad patterns before fine-tuning in relation to specific tasks (e.g. GPT-5, LaMDA). This represents a paradigm shift that has unlocked new capabilities in text, image, code and multimedia generation (Roy 2023; Jones 2023).

The dramatic growth in both private and public sector support for AI development reflects this momentum. In 2024, generative AI initiatives secured US$33.9 billion in global private investment, an 18.7% increase over 2023, underscoring firms’ eagerness to commercialise foundation models and related tools (Maslej et al. 2025).

Governments have likewise ramped up policy and regulation engagement. For instance, US federal agencies issued 59 AI-related regulations in 2024 – more than twice as many as in 2023 – spanning guidance on risk management, data governance and permissible uses of AI in critical sectors (Maslej et al. 2025), though many of these were later repealed in early 2025 by the Trump administration (Wheeler 2025).

Figure 1: The evolution of AI since the 1950s

Source: Stryker and Kavlakoglu 2024

The complex and evolving nature of corrupt practices can make AI-driven solutions appear attractive (Gerli 2024). Research shows that AI has been successful in some traditional anti-corruption areas, including procurement integrity and anti-money laundering, in both preventing and detecting corruption (Zinnbauer 2025). The ability of AI tools to process vast amounts of data, make connections across numerous datasets and learn from data to identify patterns indicative of corruption can aid in the identification of cases for targeted audit as well as the detection of high corruption risk procurement contracts (e.g. López-Iturriaga and Sanz 2018; Mazrekaj et al. 2024; Fazekas et al. 2023; Wacker et al. 2018; García Rodríguez et al. 2022). As such, AI anti-corruption tools (AI ACTs) have been increasingly adopted by governments and private sector organisations around the world. For instance, a bot called Alice has been developed by the Office of the Comptroller General in Brazil to help curb corruption in public procurement (Gerli 2024). For more details on Alice, see page 43 in Annex 1.

However, alongside its promise, AI also introduces significant challenges. First, data reliability and algorithmic bias can arise when models are trained on incomplete, unrepresentative or low-quality datasets, leading to unfair or inaccurate outcomes (Köbis et al. 2022a; Raghavan 2020, Transparency International 2025: 4). Second, inclusivity gaps persist because AI development teams and training data often lack diversity, which can amplify existing social inequities (Leech et al. 2024; Maslej et al. 2025; Transparency International 2025: 4). Third, information asymmetry and opacity in AI decision-making – commonly associated with “black-box” models – undermine transparency and accountability, making it difficult for practitioners and affected communities to understand or challenge AI-driven actions (Odilla 2023, 2024; Transparency International 2025: 4; Zinnbauer 2025). These issues – alongside others discussed in this Helpdesk Answer, such as ethical concerns about surveillance and consent – must be carefully managed if AI ACTs are to achieve their intended impact.

This Helpdesk Answer takes stock of emerging evidence in the academic and policy literature on the benefits and risks of AI ACTs and is structured as follows. The next section explains key AI technologies: machine learning (ML), deep learning, natural language processing (NLP), computer vision (CV) and generative AI (Gen AI). The section after that first introduces how AI can enhance existing anti-corruption work by being embedded into current workflows to augment rather than replace human judgement. The answer then surveys the existing evidence of benefits and risks of using AI ACTs for the prevention, detection and investigation of corruption. The Helpdesk Answer concludes with a discussion of broader challenges for deploying AI in anti-corruption efforts.

AI technologies

Although there are no clear-cut boundaries between different types of AI technologies, they can be generally divided into:

- machine learning (ML)

- deep learning

- natural language processing (NLP)

- computer vision (CV)

- generative AI (gen AI).

Machine learning (ML)

Machine learning (ML) uses algorithms that learn from large amounts of data to identify patterns and make predictions. ML-based models can improve their accuracy over time by autonomously optimising their performance, i.e. they evolve with experience (Syracuse University 2025).

There are many ML techniques or algorithms, chosen according to the problem and the nature of the data (e.g. regressions, decision trees, support vector machines (SVMs), clustering, neural networks) (IBM n.d.). Broadly, ML models fall into three categories:

- supervised learning: human experts label “training” data, which algorithms use to classify or predict outcomes on unlabelled data (IBM n.d.)

- unsupervised learning: does not require labelling because algorithms detect inherent patterns from unlabelled data to cluster the data into groups to inform predictions (IBM n.d.; Murel 2024)

- reinforcement learning: based on trial-and-error learning by systematic rewarding of correct output (IBM n.d.); decision-making is in the hands of autonomous agents which can make decisions in response to the environment without direct instructions from humans (e.g. robots and self-driving cars) (Murel 2024)

ML, along with advanced deep learning techniques, is widely used across domains, including recommendation systems (e.g. Amazon, Netflix), fraud detection in financial services (e.g. flagging unusual transactions) and personalised marketing (Mate 2025).

In anti-corruption, ML has been shown to be effective in numerous tasks, such as scanning large datasets (e.g. financial transactions, asset declarations and public contracting records) to detect money laundering networks and high corruption-risk areas in procurement processes (Harutyunyan 2023; Gandhi et al. 2024; Katona and Fazekas 2024; Fazekas et al. 2023). Supervised learning051c5b186a4d has been widely used in anti-corruption research and practice to, for example, detect cartels in public procurement contracting (Fazekas et al. 2023).

Deep learning

Deep learning is a subset of ML that uses multilayered neural networks designed to simulate the complex decision-making processes of the human brain (Stryker and Kavlakoglu 2024; IBM n.d.). These networks consist of an input layer, an output layer and multiple hidden layers of interconnected nodes in between, which allow for the automated extraction of patterns from large, unstructured datasets and the identification of relationships that support predictions (Stryker and Kavlakoglu 2024; Holdsworth and Scapicchio 2024; IBM n.d.).

Deep learning can also be applied in semi-supervised learning, where both labelled and unlabelled data are used to train models for classification tasks (Stryker and Kavlakoglu 2024). Common architectures of neural networks include (Holdsworth and Scapicchio 2024):

- convolutional neural networks (CNNs): designed to detect patterns within images and videos, widely used for pattern and image recognition

- recurrent neural networks (RNNs): typically applied to natural language and speech recognition as they use time-series data to predict outcomes

- generative adversarial networks (GANs): capable of creating new data resembling training data; for example, generated images appearing to be human faces

The most advanced AI applications, such as large language models (LLMs) powering chatbots, rely on deep learning (IBM n.d.). In the anti-corruption field, deep learning has been used, for example, in detecting collusion in procurement using CNNs (Huber and Imhof 2023).

Natural language processing (NLP)

Natural language processing (NLP) is a branch of AI that enables processing, understanding and responding to human language in text or speech form, dealing with large volumes of unstructured textual or spoken data (Syracuse University 2025; Mate 2025). In doing so, it combines ML and deep learning techniques with computational linguistics and rule-based modelling of human language (Stryker and Holdsworth 2024). Not all NLP techniques rely on ML as classical methods like “bag-of-words” are rule-based approaches that do not require training data (Dorash 2017; Stryker and Holdsworth 2024).

NLP typically involves four phases. First, it preprocesses text by transforming it to machine-readable forms (e.g. this can involve techniques such as tokenisation, lowercasing, etc.). Second, the text is transformed into numerical data with which a computer can work using different techniques, such as word embedding. Third, text analysis to interpret and extract meaningful information from text is conducted, including, for example, part-of-speech tagging, aimed at identifying the grammatical roles of words (Stryker and Holdsworth 2024). Finally, processed data is used to train ML models to learn patterns and relationships within the data, which can then be used to make predictions on unseen data (Stryker and Holdsworth 2024).

NLP powers language translation (e.g. Google Translate, DeepL), sentiment analysis (e.g. social media platforms), automated content moderation and virtual assistants (e.g. Siri) (Mate 2025; Dilmegani 2025). It also accelerates mining of information from documents across finance, healthcare, insurance and legal sectors (Stryker and Holdsworth 2024). In anti-corruption, NLP has been applied to analyse large document leaks (e.g. emails, legal documents, whistleblower reports, financial data, corporate ownership). For example, the European Anti-Fraud Office (OLAF) has used NLP techniques to detect suspicious patterns in email correspondence (European Parliament 2021).

Computer vision (CV)

Computer vision (CV) is an AI domain that uses ML and deep learning techniques, especially CNNs, to understand and interpret the content of visual information (i.e. images and videos) (Mate 2025; Syracuse University 2025). By analysing large datasets, CV learns to recognise features and distinguish one image from another (Holdsworth and Scapicchio 2024).

For example, in social media platforms, CV provides suggestions in relation to who might be in a photo posted online. In doing so, ML uses algorithmic models to enable computers to learn about the context of the visual data and learn to distinguish one image from another. A CNN helps ML or deep learning model in this process to “look” by breaking down images into pixels that are labelled to train specific features (IBM 2021; Holdsworth and Scapicchio 2024). The AI model then uses these labels to perform mathematical operations and ultimately predict what it sees and check the accuracy iteratively until the predictions meet expectations (Boesch 2023). The ultimate result is that computers can “see” (e.g. who is in the photo, what objects are on the road) and act based on those insights (Boesch 2023; Holdsworth and Scapicchio 2024).

One example of CV application is the camera lens function of Google Translate that can detect and translate different languages (Holdsworth and Scapicchio 2024). CV is applied in various industries, including energy, utilities, manufacturing and automotive (e.g. real-time object detection, lane following and object tracking in autonomous vehicles) (Holdsworth and Scapicchio 2024).

In the anti-corruption field, CV has been used for satellite data analysis to detect corruption in road construction and mining projects as well as object recognition in public works monitoring (López Acera 2023; Nicaise and Hausenkamph 2025).

Generative AI (gen AI)

Unlike other types of AI technologies, that analyse existing data, gen AI is designed to create new data or content based on a variety of inputs, including text, images, sound, animations and other types of data (Roy 2023). Gen AI models use deep learning neural networks for identifying patterns within the existing data to generate new content (Jones 2023).

Gen AI has broad applications across industries. For example, LLMs are one of the most popular applications of language based generative models that are being used for essay generation, translation and coding, to name a few of their uses (Nvidia n.d.). For instance, Open AI’s ChatGPT can be used to draft emails, write essays, code and other tasks, and its abilities keep evolving with the further development of foundation models (Open AI n.d.). For instance, a recent release of GPT-4.5 model reportedly improves its ability to recognise patterns, make connections and generate creative insights without reasoning, while hallucinatinga73542f7c931 less.

Crucially for anti-corruption, the past one to two years have seen a surge in open-source and open-weight models30ecd69962f8 that can run locally, offering strong task performance without sending sensitive data to external providers (IBM 2023b; AI21 Editorial Team 2025). Examples of open-weight models include Google’s Gemma 2, Mistral’s Mixtral 8x7B and Alibaba’s Qwen 2.5, while notable open-source models include Meta’s OpenLLaMA and TII’s Falcon Models (Mishra 2025). Local or private deployments enhance data control and confidentiality, allow fine-tuning with domain-specific datasets and can be tailored to narrow tasks (e.g., document classification, entity extraction) that are common in corruption investigations (NetAppInstaclustr 2025).

Gen AI has its potential uses in anti-corruption. For instance, an OECD survey of integrity actors (such as anti-corruption agencies, supreme audit institutions and internal audit bodies) suggests that they see LLMs as especially promising for anti-corruption efforts, particularly with regards to document analysis and text-based pattern recognition (Ugale and Hall 2024). Specifically, the surveyed institutions saw the greatest promise in using LLMs to improve operational efficiency and the analysis of unstructured data. In particular, they highlighted applications in investigations and audits, where anti-corruption bodies must process vast volumes of documents, reports and records (Ugale and Hall 2024). LLMs could assist in evidence gathering and document review by identifying irregularities, extracting relevant information, and flagging suspicious patterns that might otherwise go unnoticed (Ugale and Hall 2024).

General benefits and challenges of using AI in anti-corruption efforts

This section first situates AI within the broader evolution of information and communications technologies (ICT) that have been applied to anti-corruption efforts, ranging from early e-governance platforms and electronic procurement systems to today’s advanced analytics and predictive tools (Aarvik 2019; Zinnbauer 2025). It then outlines the key benefits and challenges of applying AI tools in anti-corruption contexts.

From digital technologies to contemporary AI systems in anti-corruption

Over the past two decades, the anticorruption field has increasingly adopted digital technologies to enhance transparency, citizen engagement and oversight (Zinnbauer 2025). Early initiatives focused on e-government systems and the digitalisation of public services, which streamlined administrative processes, reduced waiting times and were empirically shown to lower corruption risks (Shim and Eom 2008; Andersen 2009; Elbahnasawy 2014; Gurin 2014). In parallel, crowdsourcing platforms, whistleblowing portals and open‐data dashboards empowered citizens and journalists to monitor procurement, asset declarations and legislative votes in real time (Kossow and Dykes 2018; Kossow and Kukutschka 2017). More recently, blockchain has been piloted to create tamper-proof records of public contracts and financial flows, offering stronger audit trails (Hariyani et al. 2025).

Yet every wave of ICT innovation has brought its own risks. Digital channels can be exploited for illicit transactions on the dark web, crypto-based money laundering or the manipulation of citizen feedback systems (Adam and Fazekas 2018). Today, artificial intelligence represents the next frontier: AI systems can sift through millions of records to flag anomalous bids or suspicious transactions and automate facial recognition or satellite-image monitoring of public works. Early evidence suggests AI can significantly improve detection rates in public contracting and anti-money laundering efforts (Aarvik 2019; Zinnbauer 2025).

However, as with many previous technologies, AI also introduces challenges – such as data quality and bias, algorithmic opacity and uneven access to computing resources – that must be carefully managed to ensure these tools strengthen, rather than undermine, integrity and accountability.

General benefits of using AI in anti-corruption

Key features of AI systems that can be beneficial to anti-corruption efforts include:

Autonomous learning and task execution at scale: After initial programming and inputs from humans, AI systems can independently train on datasets to execute tasks that once required intensive human effort (Köbis et al. 2022a). Deep learning models using neural networks automatically detect complex patterns and relationships across millions of data points, enabling them to flag subtle corruption indicators that human analysts could miss. For example, research shows that AI ACTs can detect corruption risk zones and predict embezzlement by processing vast volumes of documents and records (Köbis et al. 2022a; López-Iturriaga and Sanz 2018; de Blasio et al. 2022).

Leveraging advanced computing power: modern AI thrives on the exponential growth in computing capacity, which allows it to ingest and process massive datasets in near real time (OECD 2025). This makes it possible to track complex corruption schemes such as the use of shell company networks, aggregate data from disparate sources and run predictive analytics that highlight where bid rigging or embezzlement is most likely to occur (Köbis et al. 2022a; OECD 2025). High-performance computing also enables rich and detailed visualisations of complex financial flows to uncover hidden relationships and money trails (OECD 2025).

Fewer human interventions: unlike human decision-makers, AI, in principle, operates without personal interests, theoretically limiting opportunities for conflicts of interest (Köbis et al. 2022a). By automating decision flows and removing discretionary human steps, AI reduces the number of interactions where petty corruption can occur (Köbis et al. 2022a). However, this reduction in human involvement also means fewer natural audit checks: if an AI system is compromised – through biased training data, for example – there may be no human operator to notice or correct it. In such cases, a corrupted AI could systematically misclassify or misallocate resources at scale before anyone realises something is wrong (Köbis et al. 2022b; Zinnbauer 2025). Thus, while fewer human interventions lower opportunities for traditional corruption, they heighten the importance of robust oversight, continuous monitoring and transparent algorithmic audit trails to detect and respond to any AI system failures or manipulations (Kossow et al. 2021).

Capability to integrate novel and disparate data sources: AI can potentially detect instances of corruption that would be hard to uncover using traditional research methods and tools. For instance, AI systems can leverage large geospatial datasets and satellite imagery to expose corrupt practices in hard-to-reach areas. Deep learning models, for example, have been used to spot illegal road construction linked to deforestation, monitor unauthorised mining and map land grabs by using satellite imagery and deep learning (Labbe 2021; Hausermann et al. 2018; Laurance 2024). These capabilities boost transparency and can empower civil society and local watchdogs with micro-level detail on where illicit activities are occurring, even in remote areas (Zinnbauer 2025; Labbe 2021).

General challenges of using AI in anti-corruption

Key general challenges include:

Data reliability and bias: AI-driven anti-corruption tools are only as good as the data they consume, yet many datasets used in anti-corruption contexts are often incomplete, inconsistent or biased (Köbis et al. 2022a; Zinnbauer 2025). Biases may result from poor quality data (missing values, errors from the data gathering stage) or personal biases of those involved in developing AI systems (Odilla 2024). Both academic studies and public policy AI ACTs analysed in this Helpdesk Answer note the issue of limitations in public expenditure data like public procurement (Fazekas et al. 2023; Katona and Fazekas 2024) and corruption investigation/conviction data (Gallego et al. 2021). These limitations, which include missing data, interoperability challenges, difficult-to-process data formats, as well as deeper concerns like the “selective labelling problem”bbd4efc0b72a (see Gallego et al. 2021; Gallego et al. 2022) negatively affect the reliability of AI-driven tools for identifying corruption risks.

For instance, the project MARA in Brazil, which uses ML to develop individual level corruption scores for civil servants based on previous conviction data, has faced criticism that it simply reinforces existing patterns and biases (Nicaise and Hausenkamph 2025). This is because it was trained only on individuals who were caught and punished, which may exclude undetected corrupt behaviour and focus disproportionately on those civil servants from agencies with robust internal oversight, which consequently overlook broader systemic corruption (Nicaise and Hausenkamph 2025; Köbis et al. 2022a; Odilla 2024). Data poisoning – deliberate tampering with training data by bad actors – poses another concern, especially for systems that rely on continuously updated online data (Ugale and Hall 2024: 45).

Therefore, it is important to invest in transparency initiatives aimed at ensuring the maintenance of high-quality, unbiased, open government and open data repositories (Zinnbauer 2025: 15). The experience of the Tribunal de Contas (TdC) in Portugal in using AI for developing audit risk models offers several useful lessons on how to improve data quality. First, enhancing data validation mechanisms, which may involve automating the cross-referencing of datasets with other external sources to automatically flag inconsistencies before they can affect the analysis. Second, enforcing data quality and consistency standards across public sector institutions to enhance more efficient use of such data (Hlacs and Wells 2025: 22).

Algorithmic challenges and capture: even the most sophisticated AI models can produce false positives (e.g. wrongly flagging innocent officials) and false negatives (e.g. failing to detect actual corruption). False positives risk reputational harm if AI-generated risk scores or alerts are made public without human verification (Köbis et al. 2022a). Conversely, false negatives can erode trust in AI tools: if a large corruption scandal slips through automated checks, the public may suspect that those in power have tampered with the AI system to hide wrongdoing (Köbis et al. 2022a).

At the broader level, the weaknesses in the training data can compound blind spots (false negatives). If corruption is already under-detected in transaction data and AI models are trained only on the limited cases that are identified, the models will become good at detecting those identified positive cases. However, this also reinforces the blind spots, making it harder for the system to detect the many cases that went unnoticed in the first place.

Transparency International (2025) has proposed the concept of “corrupt uses of AI” to cover instances in which AI systems are abused by entrusted powerholders for private gain. This could involve officials trying to game the system. For example, if an official knows what type of behaviour or data would be flagged as a “corruption risk”, they could pass on or sell this information to people to help them avoid these supposedly systematic and comprehensive checks. Rather than gaming the system, manipulations can also involve influencing the design of the AI system itself. For instance, algorithmic capture can occur in e-procurement as a one-time manipulation of the AI system by power holders, resulting in narrow politically connected interests reaping long term benefits (Köbis et al. 2022b: 8).

Need for human oversight: effective deployment requires the right balance between AI autonomy and human oversight (Köbis et al. 2022a). Many of the international standards and principles of using AI that have been emerging focus on this aspect. For example, a recently published AI Playbook for the UK Government (2025) calls for a meaningful human control at the right stages, including ensuring that humans validate any high-risk decisions influenced by AI (Principle 4). Governance frameworks – such as the OECD AI Principles and the EU AI Act – also mandate human oversight and thresholds for AI autonomy in high‐risk contexts, reinforcing that AI should augment, not replace, human decision-making (OECD 2019; EC 2024).

Institutional readiness and regulatory lag: AI effectiveness in anti-corruption contexts critically depends on the broader institutional, legal and oversight environment in which these AI systems operate (Ubaldi and Zapata 2024). In many jurisdictions, these enabling conditions lag far behind the pace of AI innovation, particularly with the rapid deployment of gen AI since 2022. National and supranational stakeholders have warned that the absence of clear governance frameworks, procurement standards and operational guidelines increases risks of misuse, bias and rights violations (OECD 2019; Ubaldi and Zapata 2024). For example, the EU’s AI Act (2024) treats many AI tools as high-risk, thereby obliging AI providers to establish a risk management system, conduct data governance (e.g. ensuring that training, testing and validation datasets are sufficiently representative) and allow for human oversight, among other requests (Future of Life Institute 2024). Yet, in many developing countries, such safeguards are absent or only partially implemented, meaning that AI systems can be deployed without adequate accountability mechanisms in place.

Inclusivity gaps: AI systems’ development and training datasets often lack diversity, reinforcing the existing digital divide, which can flow into data, model design and deployment. Women and marginalised communities remain underrepresented among AI professionals, and there is a lack of high-quality training data representing these groups (Zinnbauer 2025). For instance, women are estimated to make up only 29% of AI professionals (Constantino 2024). Moreover, while AI and computer science education is expanding, gaps in access persist as it remains limited in many African countries due to existing infrastructure challenges, including issues as fundamental as access to electricity (Maslej et al. 2025). Considering that most current AI systems are controlled by commercial actors in the Global North (Laforge 2024), they are unlikely to be tailored to the needs of the Global South (Zinnbauer 2025: 15). This increases the risk of discriminatory outcomes and has implications for anti-corruption efforts (Raghavan 2020; Leech et al. 2024; Maslej et al. 2025). For example, when AI tools are trained on historical “sanctions” or enforcement data, they can reproduce who was already visible to oversight and who was not, thereby amplifying disparities (see Odilla 2024). At the same time, “low-data environments” – common in many countries in the Global South where governance data is patchy – pose additional challenges, since this increases the likelihood that AI ACTs will be trained and rely on datasets with significant gaps or biases (for example, official data that primarily reinforces government narratives) (Foti 2025). This underscores the need to prioritise local data and triangulate information, while strengthening human oversight and ensuring transparency by involving independent experts and civil society actors in AI tool development (Foti 2025).

Information asymmetry and opacity: many AI models, especially deep learning, are “black boxes”, producing outputs without transparent explanations (Köbis et al. 2022b: 10). This opacity is compounded by the fact that both the training datasets and the model architectures are often proprietary – created and maintained by private, for-profit vendors – placing them beyond the reach of public scrutiny, although the quality gap between closed and open models have reportedly been shrinking (Pillay 2024). Moreover, this raises acute accountability concerns: if an AI tool generates erroneous or unfair outcomes, it is unclear who should be held accountable: the private developer whose opaque model produced the error or the government body that chose to integrate and act upon those outputs? (see Sanderson et al. 2023).

On the one hand, the opacity arises from the technical nature of the models, which makes it inherently difficult to interpret results. On the other, it is exacerbated by deliberate secrecy by governments about the AI systems they deploy, as seen with SyRi in the Netherlands and the “Zero Trust” programme in China (Algorithm Watch 2020; Borgesius and van Bekkum 2021; Chen 2019). The challenge of interpretability and explainability2441370fb1b2 is also evident to LLMs, considering the breadth and variety of data fed into these models (Ugale and Hall 2024). Therefore, it becomes challenging to trace the connection between input data and the outputs of these models, making it more difficult for citizens to understand how a decision was made and to appeal those decisions to protect their own rights and interests (Ugale and Hall 2024: 41). Although there are no easy solutions to these challenges, Ugale and Hall (2024: 41) note that governments have explored the use of decision trees to illustrate the link between the AI systems’ results and explanations of how they were reached and have issued explainable AI toolkits, while some academics have introduced a taxonomy of explainable techniques for LLMs (Berryhill et al. 2019; Zhao et al. 2024; Government of the Netherlands 2024).

Without clear governance frameworks, public administrators may be tempted to defer liability to vendors, while developers may evade responsibility by invoking technical complexity. The EU AI Act (2024) and OECD’s AI Principles (2019) both emphasise the need for transparency, explainability and shared accountability, requiring that high-risk AI systems include human controls, detailed documentation of training data and mechanisms for contesting decisions (EC 2024; OECD 2019, 2025).

AI and anti-corruption

This section first discusses how AI can enhance existing anti-corruption work by being embedded into current workflows to augment rather than replace human tasks and judgement. In practice, the most common, and immediately valuable, applications are the mundane2d0e228952d6 ones: using AI (especially LLMs) to automate repetitive tasks at scale. The section then reviews empirical evidence on the use of AI in the prevention, detection and investigation of corruption. For prevention and detection, it examines key themes, the AI technologies applied, central findings and the main benefits and challenges, drawing on academic studies and public policy applications. For investigations, where far fewer applications exist, the discussion focuses on the potential benefits and challenges of using AI tools, particularly in evidence gathering and document analysis.

“Mundane” uses of AI technologies in anti-corruption efforts

AI is proving valuable for governments, private firms and non-profits by automating “mundane” tasks that would otherwise consume scarce staff time or be virtually impossible to accomplish due to the vast volumes of data involved. With AI (particularly LLMs), prompts can be adjusted without building complex new programming pipelines, teams can quickly add stages to the existing pipeline and achieve strong accuracy on routine work that would be prohibitively time-consuming – or practically impossible – to perform manually at this scale. Typical examples range from translation, copy editing and project management tasks to converting PDFs into spreadsheets or structured tabular formats, classifying large datasets and extracting names, roles, dates and entities from unstructured text.

As noted later in this Helpdesk Answer, Transparency International UK’s (n.d.) use of AI to classify lobbying records transformed vast records of lobbying meetings into an analysable dataset, freeing researchers to focus on substantive insights rather than manual data collection (for practical uses of this dataset see: Whiffen 2025).

Similarly, the Transparency International Global Health Atlas aggregates and structures diverse information on corruption in the health sector, creating a reusable evidence base for investigations, advocacy and policy design (Transparency International Global Health 2025). These initiatives have demonstrated the usefulness of LLMs, ML and NLP based techniques to turn vast amounts of information into structured and regularly updated datasets.

Box 1. Civil society uses of AI in anti-corruption efforts: The experience of Transparency International UK

For several years, Transparency International UK has moved beyond theoretical work to deploy practical AI tools that turn vast, complex datasets into actionable evidence addressing public integrity challenges, from lobbying and conflicts of interest to public procurement corruption. In doing so, they have applied a range of AI techniques, including ML, NLP and gen AI.

Their Health Atlas project (discussed in the following section) uses LLMs in a multi-stage classification process. The system identifies articles relevant to corruption, classifies the specific type of corruption (e.g., bribery), and extracts key metadata like location and date, creating the world’s largest repository of evidence on health corruption. In the project's early stages, they also analysed the data (using BERT sentiment analysisf64fa5358733) to distinguish between reports of corruption and articles detailing anti-corruption efforts.

Building on these capabilities, TI UK developed Tomni AI, a versatile platform that integrates retrieval-augmented generation. This allows users to ask natural language questions of vast document sets. This system uses a hybrid approach, combining vector-based semantic search with traditional keyword search (BM25) for accuracy, before an LLM synthesises the retrieved information into a coherent answer. Tomni AI is also designed as an agentic AI system, where a researcher can define a customed sequence of tasks – such as semantic chunking, data enrichment with user-defined red flags (e.g. extract when suspicious transactions are noted and their value), and named entity and relationship extraction (to automatically populate network maps from vast PDFs/leaks/investigations) – for the system to execute automatically on unstructured data.

AI and prevention of corruption

Key themes

The potential of AI technologies in preventing corruptioncec95910311d has been tested primarily in two broad thematic areas:

- predicting corruption risks and corruption “hotspots” in the allocation and disbursement of public funds (Huber and Imhof 2023; Gallego et al. 2021; Decarolis and Giorgiantonio 2022; Rodríguez et al. 2022; Fazekas et al. 2023; Katona and Fazekas 2024; Mazrekaj et al. 2024)

- deterring lower-level corruption in the public sector (Odilla 2023; Köbis et al. 2022a; TNRC 2024).

Academic studies and public policy applications of AI ACTs demonstrate the potential of AI to identify corruption risks in public procurement contracting (e.g. Gallego et al. 2021; Odilla 2023; Katona and Fazekas 2024). Research also shows AI can effectively detect cartels and collusion in public contracting (e.g. Huber and Imhof 2023; Fazekas et al. 2023). Furthermore, empirical studies and public policy deployments highlight AI’s potential to uncover conflicts of interest, either by identifying politically connected firms (Mazrekaj et al. 2024) or by classifying lobbying meetings and health corruption cases into various categories (Transparency International Global Health 2025; Transparency International UK n.d.).

AI has also been applied to simplify administrative procedures and improve public awareness of public service delivery, thereby reducing opportunities for petty corruption. A notable example is Justina del Mar, an NLP based chatbot developed by WWF Peru. It provides artisanal fishers and shipowners with instant, reliable answers about inspections, licensing, sanctions and reporting channels (WWF 2024). Built from phone surveys and consultations that mapped common questions and knowledge gaps then systematised with regulatory texts and information obtained from authorities, the tool provides plain-language guidance via simple menus or natural language queries (WWF 2024). By putting accurate rules and reporting channels directly into users’ hands, it is intended to reduce opportunities for petty bribery or extortion tied to information asymmetries and to strengthen transparency and trust between communities and authorities (WWF 2024).

AI technologies in corruption prevention

Most academic studies and public policy applications rely on machine learning (ML) techniques, ranging from traditional regressions to deep learning models (e.g. Fazekas et al. 2023; Gallego et al. 2022; García Rodríguez et al. 2022; Mazrekaj et al. 2024). Existing academic studies often employ linear models, such as lasso, and tree-based ensemble models, like random forests, while fewer use neural networks (e.g. López-Iturriaga and Pastor Sanz 2018).

Some initiatives also use natural language processing (NLP) techniques, which were shown to be especially useful in generating novel corruption risk indicators based on textual data from public procurement notices (e.g. Katona and Fazekas 2024). Moreover, Transparency International UK has been using gen AI (LLMs) for their projects on classifying lobbying meetings (Annex 1, Example #13) and healthcare related corruption stories (Annex 1, Example #12).

Across approaches, trade-offs emerge: interpretable models (e.g. traditional statistical models) facilitate transparency and policy adoption, while more complex black-box models (e.g. neural networks) can increase predictive performance but risk resistance from oversight bodies due to their opacity.93e32c714552

Central findings

Evidence from academic studies and practical deployments analysed in this Helpdesk Answer suggests that AI ACTs show promising results in preventing corruption. Most academic studies report that their models achieve high predictive performance in identifying corruption “hotspots” in public procurement, detecting collusive bidding patterns and flagging politically connected firms with the potential of predicting conflicts of interest (e.g. Katona and Fazekas 2024; Fazekas et al. 2023; Mazrekaj et al. 2024; Huber and Imhof 2023).

For instance, using NLP and ML algorithms to analyse the text in tender documents lifted single-bid prediction22ec0729f749 from 77% to 82% in Hungary (Katona and Fazekas 2023), while ML models correctly predict over 85% of politically connected firms in the Czech Republic based solely on firm-level financial and industry indicators (Mazrekaj et al. 2024).

Key benefits and challenges

The key advantage of AI technologies for corruption prevention lies in their superior efficiency and scalability compared to traditional audits. By prioritising corruption risk “hotspots” for targeted audits, authorities can allocate their finite oversight resources more efficiently (Katona and Fazekas 2024; Fazekas et al. 2023). Complex models – such as gradient boosting or neural networks – can uncover subtle, non-linear patterns in procurement or financial data that simple rule-based systems or human auditors may miss (Chen 2019; Gallego et al. 2021). Predictive scoring also enables authorities to intervene before contracts are awarded, reducing losses from corrupt practices, such as in the case of the Alice tool in Brazil (Odilla 2023). Moreover, AI tools can integrate heterogeneous data sources – structured procurement records, corporate ownership registries, social network mappings and unstructured text – to create richer and more robust corruption risk profiles and identify more complex collusion and conflicts of interest patterns (Aarvik 2019; García Rodríguez et al. 2022; Mazrekaj et al. 2024; Zinnbauer 2025).

However, challenges persist. First, the opacity of black-box models such as deep neural networks limits interpretability, which can undermine public trust (Chen 2019). Second, there are still significant challenges with data quality, especially with regards to public procurement datasets (Katona and Fazekas 2024; Fazekas et al. 2023). Incomplete, inconsistent or biased datasets can produce misleading results, amplifying false positives or negatives. This is especially problematic with the use of corruption investigation/conviction data, which is prone to a “selective labelling problem” (see Annex 1, Example #9 below) (Gallego et al. 2021). Third, corrupt actors may adapt their behaviour once risk indicators become known, requiring periodic retraining with updated data to maintain model effectiveness (Fazekas et al. 2023). Finally, there are resource and capacity gaps: building and sustaining these systems requires technical expertise and institutional infrastructure that may be lacking in some public agencies. In citizen-facing tools, such as Justina del Mar in Peru, basic digital literacy among end-users is also a prerequisite for effective use (TNRC 2024).

For a list of examples of the application of AI ACTs in corruption prevention, see Annex 1.

AI and detection of corruption

Key themes

The potential of AI technologies in detecting corruption has been tested in two broad thematic areas:

- strengthening public sector accountability and transparency by leveraging and connecting diverse political integrity datasets (Odilla 2023; Harutyunyan 2023; Chen 2019; Transparency International Ukraine 2025; Bosisio et al. 2021; Wacker et al. 2018; Gandhi et al. 2024)

- using satellite imagery to detect corruption risks linked to environmental harm (WWF 2023; Wageningen 2023; Hillsdon 2024; Labbe 2021; Paolo et al. 2024; Zinnbauer 2025)

In the first area, academic studies and public policy applications demonstrate AI’s potential to detect corruption risks associated with firms and individual public officeholders. Tools focused on individuals have scrutinised asset declarations (Harutyunyan 2023), flagged wasteful spending (Odilla 2023) and detected conflicts of interest or irregular financial movements (Chen 2019). Firm-focused applications have assessed corruption risks in public procurement contracts (Transparency International Ukraine 2025), flagged corporate ownership anomalies (Bosisio et al. 2021), detected fake suppliers (Wacker et al. 2018) and identified money laundering patterns (Gandhi et al. 2024).

For instance, the Tweetbot Rosie de Serenata was created to analyse reimbursement claims submitted by members of Brazil’s congress (Köbis et al. 2022a). Originating from the grassroots anti-corruption initiative Operação Serenata de Amor (Operation Love Serenade), Rosie processes official spending data and flags suspicious expenses, such as cases where a congress member appears to have been in two locations on the same day and at the same time (Cordova and Gonçalves 2019). When a suspicious transaction is detected, Rosie automatically posts the finding on X, inviting citizens and legislators to confirm or refute the suspicion (Cordova and Gonçalves 2019).

In the second area, AI combined with satellite imagery has been used to detect illegal deforestation (WWF 2023; Wageningen 2023; Hillsdon 2024), illegal mining (Labbe 2021) and illicit fishing activities (Paolo et al. 2024), among its other uses (see Zinnbauer 2025). For instance, Forest Foresight, developed by WWF-Netherlands with commercial and academic partners, has demonstrated the ability to predict illegal deforestation up to six months in advance with 80% accuracy in pilot projects in Gabon and Borneo (WWF 2022).

AI technologies

Most public policy applications of AI ACTs and academic studies analysed in this Helpdesk Answer targeting corruption risks among public officeholders and firms use ML algorithms (Odilla 2023; Chen 2019; Transparency International Ukraine 2025). In contrast, applications based on satellite imagery typically use deep learning neural networks (CNNs) for image classification (Wacker et al. 2018; Paolo et al. 2024). The former typically favour relatively interpretable models (e.g., random forests and regularised linear/logistic models) that allow feature-level explanations and audit trails, whereas CNN based image classifiers are higher-accuracy but comparatively more opaque.

Some public policy applications combine NLP techniques with ML and deep learning neural networks, for example, for text pattern recognition. An example is Inspector AI in Peru, which uses NLP to process suspicious transaction reports (GIZ 2024; European Parliament 2021; Gerli 2024; Nicaise and Hausenkamph 2025).

Central findings

Evidence from public policy applications of AI ACTs and academic studies show promising results in AI’s ability to detect corruption, although some public policy applications are still in their early stages or are currently undergoing further development.

Civic-tech platforms such as Rosie (Brazil) (Odilla 2023; Operação Serenata de Amor n.d.), and DOZORRO (Ukraine) (see Box 2) have helped prevent inefficient spending, blocked corruption-prone contracts before signature and uncovered conflicts of interest among public officials. AI ACTs have also enabled civil servants to detect corruption risks faster by automating processes, such as extracting information from suspicious transaction reports in Peru (GIZ 2024) and automating the process of early detection of illegal deforestation (e.g. WWF 2023; WWF Ecuador 2025). Other deployments, like China’s Zero Trust programme, have demonstrated large-scale anomaly detection capacity by cross-referencing extensive government datasets, although this has raised privacy concerns (Odilla 2023; Chen 2019; Transparency International Ukraine 2025).

Academic studies further show AI’s potential to detect corruption, for example, by detecting potentially illegal fishing activity by mapping industrial fishing vessels that are not captured by public monitoring systems (Paolo et al. 2024) or by detecting fake suppliers in public contracting (Wacker et al. 2018).

Box 2. Countering public procurement corruption with DOZORRO in Ukraine

DOZORRO is a feedback platform integrated with Ukraine’s centralised public procurement database, Prozorro, established in 2016, which uses machine learning to monitor public procurement activities (Strawinska n.d.; Kucherenko 2019). It is an AI-powered system of civic monitoring over public procurement in Ukraine developed by Transparency International Ukraine (2018). Unlike systems that rely on exhaustive lists of corruption risk indicators – an approach vulnerable to gaming of indicators or manipulation by corrupt officials – DOZORRO’s software was designed to avoid such predictability (Transparency International Ukraine 2018).

In 2018, 20 experts reviewed approximately 3,500 tenders without access to the amounts or names of procuring entities, simply indicating whether each tender appeared risky. Their assessments were then used to train the AI algorithm (Transparency International Ukraine 2018). Today, the system independently evaluates the likelihood of corruption risks in tenders and forwards high-risk cases to civil society organisations within the DOZORRO network. The model “remembers” correct classifications and “forgets” incorrect ones, enabling continuous refinement over time (Transparency International Ukraine 2018; Aarvik 2019).

DOZORRO has proven effective in preventing inefficient spending and identifying high corruption risk contracts in Ukraine (Open Stories 2021; Transparency International Ukraine 2025a).For instance, in July 2025, the DOZORRO team analysed 165 procurement procedures worth over UAH 10 billion (approx. €207 million) and identified violations in 89 tenders, with inflated pricing the most common issue (Transparency International Ukraine 2025b). Through DOZORRO’s requests to procuring entities and its cooperation with law enforcement in the first half of 2025, inefficient spending of UAH 133 million (approx. €2.7 million) was prevented (Transparency International Ukraine 2025b).

Key benefits and challenges

The key benefit of deploying AI technologies for corruption detection is the ability of AI ACTs to detect risky tenders (Transparency International Ukraine 2025), fake bidders (Wacker et al. 2018), suspicious financial transactions that could indicate money laundering (Gandhi et al. 2024) or conflicts of interest of public officials (Chen 2019; Harutyunyan 2023) before money moves. In addition, these tools can detect environmental challenges such as illegal deforestation (WWF 2023; WWF Ecuador 2025) before environmental degradation occurs, enabling early audits and inspections.

These tools use NLP techniques, ML and deep learning algorithms to sift through and connect heterogenous datasets at a high scale and speed to look for asset declaration discrepancies (Harutyunyan 2023), procurement suppliers’ corruption risks (Hlacs and Wells 2025), financial transaction data to identify money laundering patterns (Gandhi et al. 2024) or satellite data to identify potential illegal fishing (Paolo et al. 2024), mining (Labbe 2021) or deforestation (WWF 2023). This can free up human resources to focus on high-risk cases.

Documented impact of these tools includes reduced meal reimbursement spending by approximately 10% after the launch of Rosie in Brazil (Odilla 2023; Operação Serenata de Amor n.d.), twice as many cases referred to prosecutors with Peru’s Inspector AI (GIZ 2024) and ranger interventions guided by Forest Foresight risk maps (WWF 2023). This is evidence that AI can translate into concrete enforcement outcomes.

Challenges are, however, significant. As with prevention, data quality remains a central obstacle: incomplete, inconsistent or biased datasets undermine reliability (European Parliament 2021; Gandhi et al. 2024; Paolo et al. 2024; Hlacs and Wells 2025).

Further, the use of high-performance but less-explainable models (e.g. based on neural networks like CNNs) is even more present in detection compared to prevention (see examples below), which carries a risk of public backlash or institutional resistance (Chen 2019). The stakes are also significantly higher in detection: unlike prevention models, which often highlight broad risk patterns or “hotspots,” detection tools focus on specific individuals, firms or entities. As a result, errors can have grave consequences: false positives may wrongly implicate officials or companies, damaging reputations, prompting lawsuits or even leading to unjust legal action, while false negatives can allow serious corruption to go undetected. This combination of opacity and high-stakes outcomes makes explainability, transparency and careful human oversight particularly critical at the detection stage.

There are also operational and capacity challenges. The benefits of AI tools depend on embedding them into workflows (case management, audit triggers), sustained funding and skills to retrain/monitor models, plus on-the-ground capacity to act on alerts (e.g., deforestation hotspots) (WWF 2023).

Corrupt actors may also adapt their behaviour once risk indicators become known, requiring regular model retraining against evolving corrupt patterns (see Fazekas et al. 2023).

Finally, some corruption detection tools raised serious privacy concerns, such as SyRi in the Netherlands (Algorithm Watch 2020; Borgesius and van Bekkum 2021) and the Zero Trust programme in China (Chen 2019), due to secrecy of the algorithm used, a lack of transparency on how private data is handled and linkage of vast data sources.

For a list of examples of the application of AI ACTs in corruption detection, see Annex 2.

AI and corruption investigations: Potential, benefits and challenges

Compared to prevention and detection, investigative AI based tools are less common. According to some recent surveys (Ugale and Hall 2024), real-world applications are still limited, particularly in the public sector, and the return on investment remains unclear.

Nonetheless, the potential of AI technologies in corruption investigations is increasingly recognised. For instance, in its 2024 report, the European Public Prosecutor’s Office highlights that its digital operations team initiated the Operational Digital Infrastructure Network programme to develop digital tools that would strengthen their investigators’ capacities by using artificial intelligence and big data analysis (EPPO 2025). Similarly, the European Investment Bank’s investigations division engaged with international investigators in 2024 to explore the use of AI in investigations (EIB 2025).

A recent OECD survey of 59 organisations across 39 countries (Ugale and Hall 2024) found strong interest in generative AI (particularly LLMs) for anti-corruption work. Respondents highlighted their potential to make auditors’ and investigators’ work more efficient by automating time-consuming tasks and allowing staff to focus on activities requiring human judgement and expertise (Ugale and Hall 2024: 15). Surveyed integrity actors identified the greatest value of LLMs in the areas of investigative and audit processes as relating to evidence gathering and document review (Ugale and Hall 2024: 17). Specifically, LLMs could help investigative work by organising large volumes of text for easy prioritisation and consumption and help in pattern recognition. Indeed, several AI tools have already been deployed in major corruption investigations (see Box 3).

Box 3. AI in corruption investigations: from Operation Car Wash to Rolls Royce bribery cases

In Brazil, the ContÁgil system, a data retrieving and data analysis tool for identifying fiscal fraud and money laundering, developed by the Special Secretariat of Federal Revenue of Brazil (RFB) was used in one of the largest corruption investigations in Brazil, the Operation Car Wash (Lava Jato), to identify complex networks of intermediaries and shell companies and connecting them with politicians and businessmen (Jambeiro Filho 2019; Odilla 2023: 378). It uses data such as asset declarations, ownership registers and tax payments, among other sources (Odilla 2023: 363).

Its resources include an ML environment with supervised learning algorithms and algorithms for clustering, outlier detection, topic discovery and co-reference resolution (Jambeiro Filho 2019). ContÁgil also has a social network analysis tool, which can visualise networks between people and companies based on scanning various data sources (Jambeiro Filho 2019).

The tool increases efficiency dramatically as it reportedly accomplishes in one hour what would take a human inspector a whole week (Jambeiro Filho 2019). The tool however, also exhibited some challenges, such as alert overflow, false positives, slow adaptability to new forms of wrongdoings and limited auditability, among other challenges (Odilla 2023: 372).

AI has also been used by the Serious Fraud Office (SFO) in the UK. In 2018, the SFO hired an “AI lawyer” tasked with automatically analysing documents (Martin 2018). The SFO previously used similar technology developed by a Canadian firm to spot legally privileged information among 30 million documents during the four-year long Rolls Royce bribery and fraud investigation (Martin 2018; Royas 2017; López Acera 2023). The SFO noted that the technology was 80% cheaper than hiring outside counsel to review documents and identify legally privileged information (Martin 2018).

The OpenText Axcelerate, an AI-driven tool, not only flags legally privileged materials but scans and organises information from various data formats and document types, displaying relevant information for investigation (Martin 2018). However, a recent report highlighted several challenges associated with this and similar tools. In February 2025, SFO noticed that searches did not return expected results as number of documents were omitted due to formatting issues, which required reconfiguration of the software, demonstrating the need for regular maintenance of AI tools (Fisher 2025: 75; Herbert Smith Freehills Kramer n.d.; Ring 2025).

A more serious concern relates to lawyers’ recently questioning of SFO’s evidence disclosure software, which could undermine historical convictions (Ring 2024). Specifically, SFO is investigating its legacy software tools (its old provider Autonomy Introspect and current Axcelerate system) that were used on dozens of cases to evaluate how it recognised punctuation, and it is reviewing the encoding issue which may have interfered with document searches (Ring 2024). Lawyers have been asking for clarity from prosecutors about how these issues may have affected current and past cases in terms of disclosure (Ring 2024).

While integrity actors considered document analysis and text-based pattern recognition the most valuable uses of LLMs for anti-corruption and anti-fraud investigations, they reported few advanced initiatives (Ugale and Hall 2024: 7). According to the survey, the actors who use LLMs either rely on turnkey foundation modelseb452a85fbeb developed by private firms or adapt existing models by fine-tuning them with specific datasets for targeted tasks (Ugale and Hall 2024: 8).

Broader challenges and implications of AI in anti-corruption efforts

Addressing risks of potentially harmful uses of AI systems

As highlighted in previous sections, including the Zero Trust programme in China, many AI ACTs involve centralised government control over sensitive data. This raises significant risks of surveillance, political capture and consolidation of power by political officeholders and tech companies at the expense of public interest (Köbis et al. 2022b; Köbis 2023). Similar concerns have been raised about the consolidation of power in the hands of small number of highly influential tech companies developing AI systems, who “are likely to steer the technology in a direction that serves their narrow economic interests rather than the public interest” (von Thun 2023).

In such contexts, AI systems may even be deliberately abused by power holders for private gain at the expense of the public interest through the intentional design of AI systems for corrupt purposes, manipulation of training data or corrupt application of otherwise legitimate tools (Köbis et al. 2022b: 7). Transparency International (2025: 3) has sought to draw attention to these risks through its advancement of the concept of “corrupt uses of AI”, defined as the abuse of AI systems by entrusted powerholders for private gain. Commercial vendors can also exacerbate these risks by promoting opaque, top-down solutions that create vendor lock-in,8e1892987ead inefficiencies or undue influence (Köbis et al. 2022a).

A first line of defence lies in ensuring algorithmic transparencyd79cefb309f5 and accountability, recognising that algorithmic systems are far from being neutral forms of technology (Zerilli et al. 2019; Jenkins 2021). Kossow et al. (2021: 11) highlight two key goals of algorithmic transparency and accountability: understanding how an algorithmic system generally functions and clarifying how it reaches individual outcomes. To this end, the Association for Computing Machinery (2017: 2) proposes seven key principles for algorithmic transparency and accountability:

- “Awareness: owners, designers, builders, users, and other stakeholders of analytic systems should be aware of the possible biases involved in their design, implementation, and use and the potential harm that biases can cause to individuals and society.

- Access and redress: regulators should encourage the adoption of mechanisms that enable questioning and redress for individuals and groups that are adversely affected by algorithmically informed decisions.

- Accountability: institutions should be held responsible for decisions made by the algorithms that they use, even if it is not feasible to explain in detail how the algorithms produce their results.

- Explanation: systems and institutions that use algorithmic decision-making are encouraged to produce explanations regarding both the procedures followed by the algorithm and the specific decisions that are made. This is particularly important in public policy contexts

- Data provenance: a description of the way in which the training data was collected should be maintained by the builders of the algorithms, accompanied by an exploration of the potential biases induced by the human or algorithmic data gathering process. Public scrutiny of the data provides maximum opportunity for corrections. However, concerns over privacy, protecting trade secrets, or revelation of analytics that might allow malicious actors to game the system can justify restricting access to qualified and authorised individuals.

- Auditability: models, algorithms, data, and decisions should be recorded so that they can be audited in cases where harm is suspected.

- Validation and testing: institutions should use rigorous methods to validate their models and document those methods and results. In particular, they should routinely perform tests to assess and determine whether the model generates discriminatory harm. Institutions are encouraged to make the results of such tests public.”

Complementing these principles, emerging integrity frameworks for public administration emphasise that (see Jenkins 2021; Kossow et al. 2021):

- people have to be informed when they interact with or are subject to an AI system

- individuals affected by an AI decision must be helped to understand its outcome

- those who are affected by an AI system decision should be able to challenge the outcome

Köbis et al. (2022b) further stress the importance of data and code transparency, routine model audits, ethics training for data scientists and strengthened whistleblowing mechanisms to minimise the risk that AI tools are misused or even used themselves to perpetrate corrupt acts.

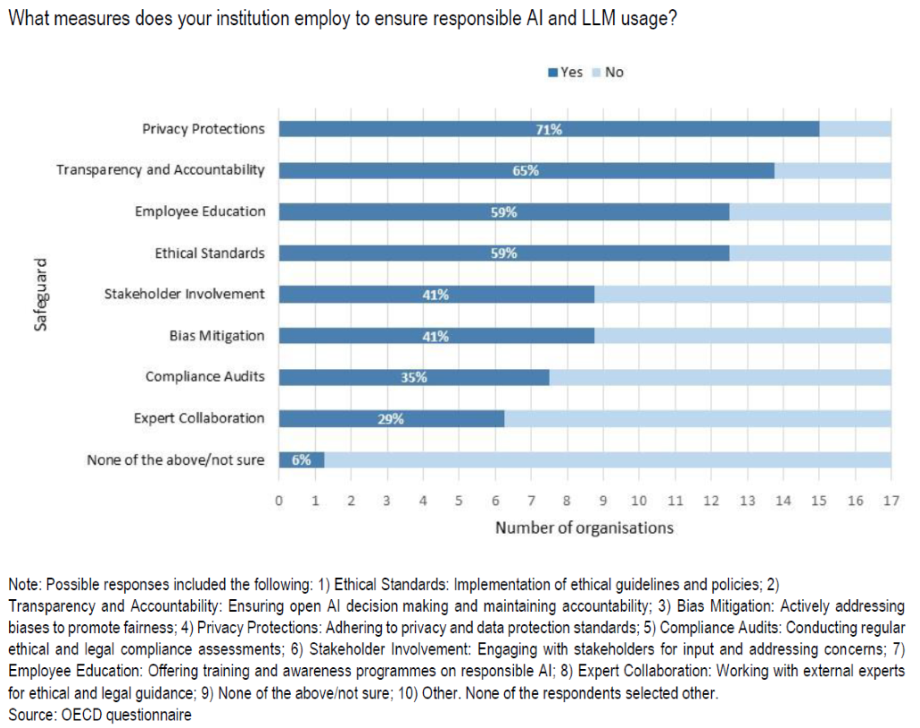

There is some limited emerging evidence of responsible AI practices beginning to be deployed in certain countries. An OECD survey of integrity actors (such as anti-corruption agencies, supreme audit institutions and internal audit bodies) on their use of gen AI found that most of the institutions surveyed already employ privacy protections, transparency and accountability measures and employee education as central safeguards for ethical use of AI tools and LLMs (Ugale and Hall 2024: 35) (see Figure 2).

Figure 2. Reported safeguards for ensuring responsible use of AI and LLMs.

Source: Ugale and Hall 2024: 35.

As Gerli (2024) notes, there is currently a broad scholarly consensus that AI should complement existing anti-corruption efforts rather than replace them, and that the overall ecosystem in which AI-driven solutions are designed, developed and implemented is critical for its success, as will be discussed in the following section (Etzioni and Etzioni 2017; Köbis et al. 2022a).

Addressing institutional and regulatory gaps

Without a broader institutional framework, it is difficult to ensure that AI systems for anti-corruption are procured, designed and used in a responsible manner (Gerli 2024). Over the past five years in particular, new standards and pieces of legislation specifically addressing AI have emerged (see Box 4).

Box 4. Standards and principles for the development and deployment of AI systems in public administration

There is a growing body of international standards aimed at ensuring the responsible use of AI in public administration, with a focus on accountability and public integrity (Ubaldi and Zapata 2024; OECD 2025). In parallel, an increasing number of countries is adopting national level regulations and principles (Ugale and Hall 2024; Maslej et al. 2025; UK Government 2025). These frameworks seek to mitigate risks of bias, opacity, misuse and lack of accountability by embedding transparency, human oversight, explainability and rights protection into AI systems (OECD 2019).

The OECD Principles on Artificial intelligence (2019, updated in May 2024) were the first intergovernmental standards on AI, setting out five core principles for policy makers and AI actors:

- inclusive growth, sustainable development and well-being;

- human rights and democratic values, including fairness and privacy;

- transparency and explainability;

- robustness, security and safety; and

- accountability.

For example, the transparency and explainability principles require AI actors to provide meaningful information about AI systems – their data sources, logic, and limitations – so that those affected can understand and challenge automated decisions (OECD 2019). Accountability further requires traceability of datasets, processes and decisions (OECD 2019).

The EU AI Act (2024) is the world’s first comprehensive framework for AI regulation (European Parliament 2023; Elbashir 2024). It establishes obligations for AI systems based on risk levels, distinguishing between four risk levels (Ubaldi and Zapata 2024: 16):

- unacceptable risk: prohibited uses (e.g. predictive policing, social scoring or assessing the risk of an individual committing criminal offences)

- high-risk: uses with significant potential harm to health, safety, democracy (e.g. most public sector applications), which therefore require establishing a risk management system, data governance, compliance documentation and fundamental rights impact assessments, among other requirements)

- limited risk: applications such as chatbots and deep fakes, where transparency obligations require informing users that they are interacting with AI

- minimal risk: systems which require code of conduct, such as video games.

These measures prioritise transparency obligations to ensure AI systems’ accountability and explainability (Elbashir 2024).

At the national level, several initiatives complement these frameworks. In 2025, the UK government published its artificial intelligence playbook, which sets out ten principles for safe, responsible and effective use of AI in government organisations. In January 2024, the Netherlands became one of the first countries to publish a strategy specifically focused on gen AI, outlining a vision for using gen AI in the public sector (Ugale and Hall 2024: 14). These principles include developing and applying gen AI in a safe way, developing and applying it equitably, ensuring that it serves human welfare and safeguards human autonomy, and that it contributes to sustainability and prosperity (Ugale and Hall 2024: 14).

Other countries have advanced oversight mechanisms for the deployment and use of AI systems in the public sector: Norway’s office of the auditor general began auditing central government AI use in 2023; France published a guide on algorithmic transparency; Ireland issued guidelines for AI in the public service; and the Netherlands developed governance guidance for responsible AI applications (Ubaldi and Zapata 2024: 15, 19).

Procurement of AI systems also requires clear standards. A recent Transparency International (2025: 10) paper addresses corrupt uses of AI and recommends that:

- authorities should publish plans for establishing or procuring AI systems in advance, with information on the purpose of those systems

- contracting authorities should require suppliers to demonstrate transparency (e.g. by making source code available to independent experts for periodic inspections)

- contracting authorities should have in place impact assessments and audit systems to reduce risks of misusing AI systems for private gain

Capacity building challenges

Alongside ensuring transparent and accountable deployment of AI in the anti-corruption field and developing the necessary regulatory and institutional frameworks, it is equally important to address capacity building challenges (OECD 2025).

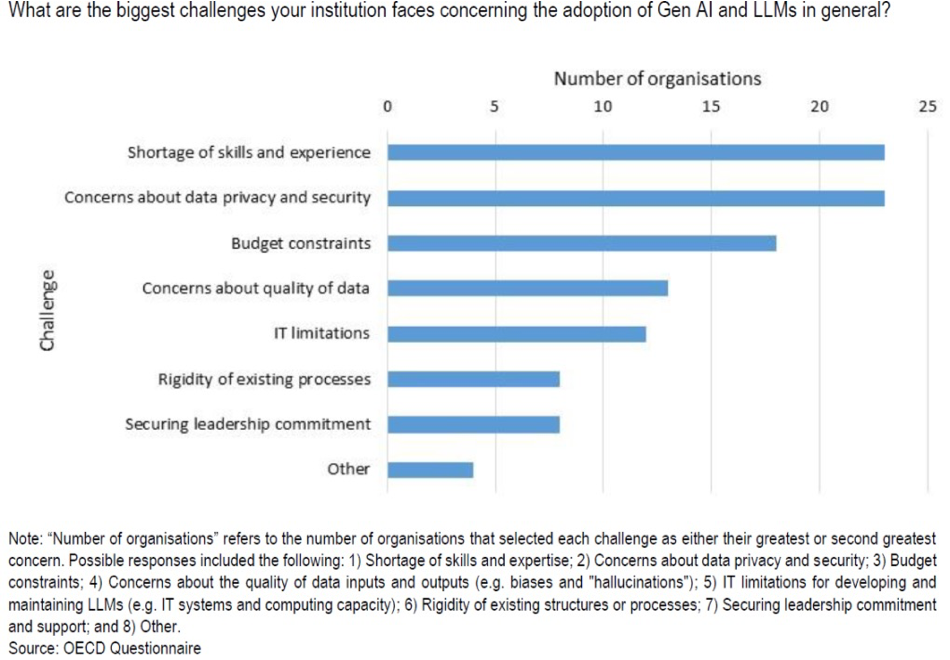

A recent OECD survey of integrity actors found that a shortage of skills and experience ranked as the most pressing concern in implementing gen AI and LLMs, followed by concerns over data privacy and budget constraints (Figure 3).

Figure 3. Key perceived challenges for the adoption of gen AI and LLMs in the public sector.

Source: Ugale and Hall 2024: 26.

In relation to this, another pressing challenge is the mismatch between AI tools and institutional capacity, either because agencies lack the people/skills to use them effectively or because the tools themselves fail in ways that carry legal consequences. Brazil’s Alice (Annex 1, Example 5) shows the first problem: chronic staff shortages left auditors overloaded with risk-alert emails, unable to triage everything, so potential red flags went unreviewed (Odilla 2023). The example from the UK Serious Fraud Office illustrates the second: AI-assisted disclosure platforms (used to sift tens of millions of documents and identify legally privileged information at far lower cost compared to non-AI alternatives) later omitted material due to formatting/encoding issues, forcing reconfiguration and raising concerns that historical searches – and therefore disclosure in past cases – may have been incomplete (Ring 2024, 2025; Fisher 2025). In investigative settings, such failures can jeopardise prosecutions and undermine trust.

Some of these challenges can be addressed with careful planning from the onset and by learning from experiences of other countries. For instance, the findings of a recent OECD survey of using gen AI and LLMs in the public sector for anti-corruption and integrity suggest several ways of piloting and scaling gen AI initiatives (Ugale and Hall 2024: 29):

- start with incorporating gen AI into lower risk areas and processes (e.g. writing document summaries) as such an approach can help with capacity building in areas where mistakes are not that costly

- IT requirements need to be considered for both piloting and scaling AI initiatives, including computational and storage capacities, the availability of high-performance computing power, and data storage and data management capabilities

Further, the initiative with data and AI-driven audits in TdC in Portugal suggests the importance of strengthening data literacy and digital skills of TdC staff to ensure a successful implementation of these audits. This means that users of audit risk models need to undergo continuous training on how to use these tools, understand the outputs and interpret the results, as well as to be aware of any changes (e.g. introduction of new risk indicators) (Hlacs and Wells 2025: 23).

Annex 1: Examples of AI ACTs in corruption prevention

Example #1: Identifying strategies for limiting competition in public contracting (Katona and Fazekas 2024)

|

AI technology applied |

Machine learning (logistic regression, random forest, XGBoost models) and classical NLP techniques. |

|

Developed by/deployed by |

Academics (Katona and Fazekas 2024). |

|

Datasets used |

All published government tenders in Hungary between 2011-2020 (approx. 119,000 contracts). |

|

Main objectives |

To show the relevance of textual information in bidding conditions, product descriptions and assessment criteria for predicting single-bidding in otherwise competitive markets. |

|

Main benefits |

Introducing contract-related textual information improved the model accuracy from 77% to 82% compared to their models containing only structured variables (e.g. no publication of the tender call). |

|

Main challenges |

A high rate of missing data, which is likely due to a lot of text being included in the full tender documents, rather than the official tender announcements. The authors used the latter as the first source, which had an irregular structure and contained difficult-to-process formats (i.e. scanned PDFs) (see Katona and Fazekas 2024). The study relies on only one proxy of corruption risk: single-bidding. |

Example #2: Identifying politically connected firms (Mazrekaj et al. 2024)

|

AI technology applied |

Machine learning (logistic regression, ridge regression, lasso, random forests and random forests with boosting). |

|

Developed by/deployed by |

Academics (Mazrekaj et al. 2024). |

|

Datasets used |

The dataset included a population of firms registered in the Czech Republic in 2018 (254,367 firms) filled with information on political donations, donating board members and those running for political office (from domestic sources and Orbis company database). |

|

Main objectives |

To show how machine learning techniques can be used to predict political connections. |

|

Main benefits |

Machine learning models accurately predict over 85% of politically connected firms based only on firm-level financial and industry indicators, suggesting the potential of ML for public institutions to identify firms whose political connections may represent conflicts of interest (Mazrekaj et al. 2024). |

|

Main challenges |

They use easily interpretable machine learning algorithms (i.e. random forests) rather than “black-box” models like neural networks. The trade-off is that the latter have even greater predictive accuracy, at the expense of explainability (Mazrekaj et al. 2024). |

Example #3: Identifying public procurement cartels (Fazekas et al. 2023)

|

AI technology applied |

Machine learning (logistic regression, random forests and gradient boosting machines). |

|

Developed by/deployed by |

Academics (Fazekas et al. 2023). |

|

Datasets used |

Data for 78 cartels in 7 countries between 2004-2021. Public procurement datasets from Bulgaria, France, Hungary, Latvia, Portugal, Spain and Sweden for the 2007-2020 period. |

|

Main objectives |

To test the predictive power of machine learning models to detect cartel behaviour in public contracting. |

|

Main benefits |

The models demonstrate that no single indicator – or even a small set of indicators – can reliably predict cartel behaviour. Instead, combining multiple indicators yields accuracy rates of 77–91% in predicting cartels across different countries. These models have clear policy relevance: they can support investigations and guide preventive interventions. For instance, given the finding that one-third of procurement markets in the seven selected countries are at high risk of cartelisation, the results could inform the design of preventive policies aimed at removing barriers to competition in these high-risk markets (Fazekas et al. 2023). |

|

Main challenges |

There are challenges with data quality of public procurement data (large amount of missing data and of certain fields, such as the losing bidder and bid price information). Learning models require adaptation and improvement with new learning materials (e.g. latest investigative results) (Fazekas et al. 2023: 27) to keep up with cartels changing their behaviour and tactics. |

Example #4: Predicting cartel participants in public procurement contracting (Huber and Imhof 2023)

|

AI technology applied |

Deep learning (CNNs) computer vision approach. |

|

Developed by/deployed by |

Academics (Huber and Imhof 2023). |

|

Datasets used |

Labelled procurement data from Japan and Switzerland with known episodes of cartel activity and competitive periods. These data allow the authors to build many pairwise “bid-image” samples for training and testing the CNN. |

|

Main objectives |

Test whether CNNs can reliably flag cartel participants from bidding patterns. |

|

Main benefits |

The CNNs on average correctly classify 19 out of 20 firms as cartel members or competitive bidders (Huber and Imhof 2023: 9). CNNs reach a high accuracy of 95% on average for both Japanese and Swiss bid rigging cases. |

|

Main challenges |